Zhen Xu

Zhejiang University

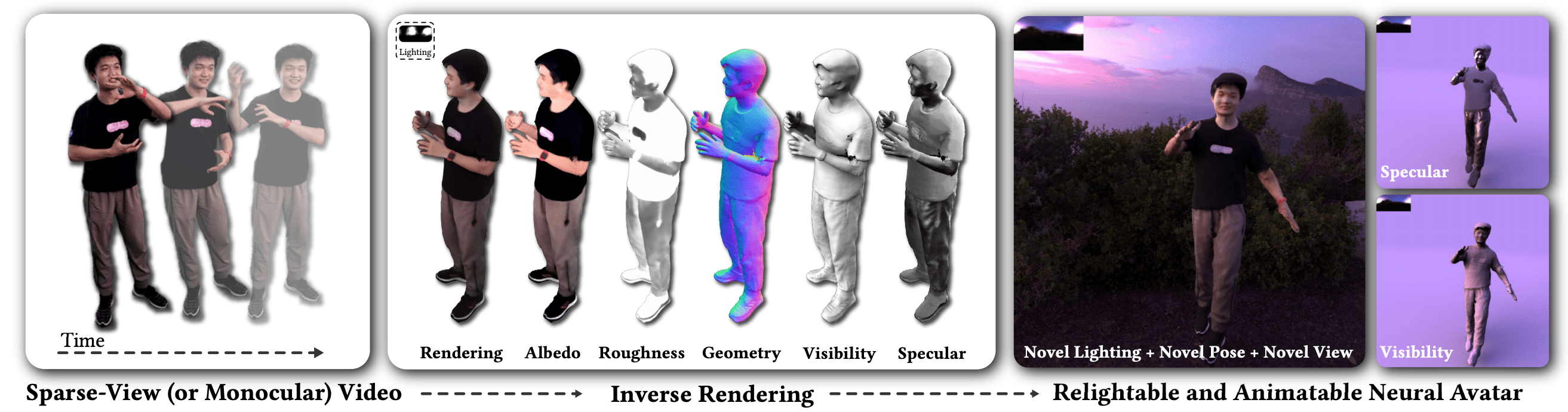

S1E04 - Relightable and Animatable Neural Avatar from Sparse-View Video

Speaker

Zhen Xu is a second-year Ph.D. candidate at Zhejiang University, where he studies computer graphics and computer vision, with a particular interest in 3D technologies. He is glad to connect with anyone who comes across his work online and takes an interest in it.

On GitHub, he maintains mostly private repositories, which mainly consist of course and research projects. His current research focuses on 3D/4D neural reconstruction and rendering, as well as digital humans.

Talk: Relightable and Animatable Neural Avatar from Sparse-View Video